Ed tech companies promise results, but their claims are often based on shoddy research

The Hechinger Report

MAY 20, 2020

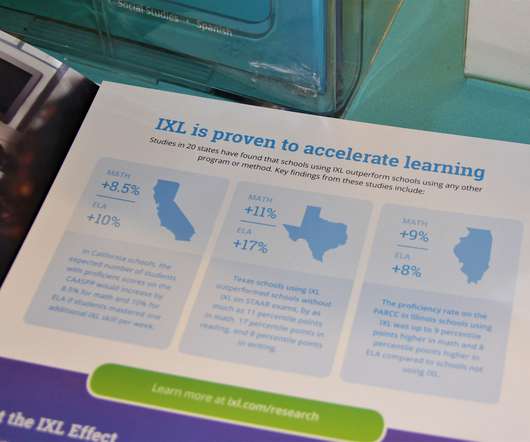

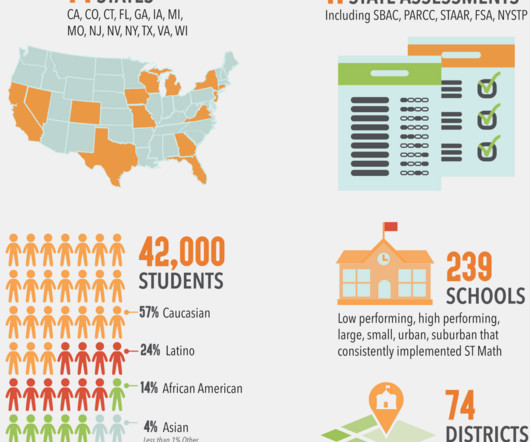

Examples from The Hechinger Report’s collection of misleading research claims touted by ed tech companies. All three of these companies try to hook prospective users with claims on their websites about their products’ effectiveness. Some companies are trying to gain a foothold in a crowded market. Video: Sarah Butrymowicz.
