Grading in my Discrete Mathematics class: a 3x3x3 reflection

This article originally appeared back in December at Grading for Growth, a blog about alternative grading practices that I co-author with my colleague David Clark. I post there every other Monday (David does the other Mondays). Check the end of this post for some extra thoughts that don't appear in the original.


As our semester comes to a close, David and I will be giving retrospectives on how things went for us in our courses, particularly our grading setups. I usually do this on my main blog in the form of a "3x3x3 reflection" where I give three things I learned, three things that surprised me, and three questions I still have. Here's the one from Winter 2021 for calculus and here's the one for modern algebra. I usually take a broad view in these reflections, but this time I'm going to concentrate just on grading.

This semester I taught two sections of Discrete Structures for Computer Science 1, an entry-level course for Computer Science majors on the mathematical foundations of computing. Nearly all the students are CS majors. There were 24 students in each section. Here's the syllabus. Here's what you need to know about the assessments and grading:

- Basic skills that students needed to master were organized into 20 Learning Targets. Eight of those were designated "Core" targets that every student needed to master.

- There were ten Weekly Challenge problem sets consisting of application and extension problems. Here's an example; here's another.

- We also had Daily Prep assignments to get ready for class. Here's a typical one. Each had two parts: one completed before class and another completed as an in-class group quiz.

- Everything was graded on a two-level rubric using the specifications in this document. Daily Preps were two-part assignments worth 1 point per part. Weekly Challenges were graded Satisfactory/Unsatisfactory on the basis of correctness and quality of explanations.

- Learning Targets could be assessed in several ways: through regularly scheduled quizzes (here's an example), oral quizzes done in office hours, or a short video of a custom-assigned problem. Students were marked as "fluent" on a Learning Target once they produced two successful demonstrations of skill on that target through any combination of those means.

- Pretty much everything could be revised and resubmitted in some way, with no penalty. (There were restrictions, though; for example, only two Weekly Challenge revisions per week were allowed.)
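To make the fluency rule concrete, here is a minimal Python sketch, assuming a hypothetical record of attempts stored as (target, method, successful) tuples; the target names, method labels, and function names are illustrative and not from the course itself. It counts a Learning Target as fluent once it has two successful demonstrations from any mix of quizzes, oral quizzes, and videos, and tallies how many Core targets are fluent. How those counts map onto a course grade is not modeled here.

```python
from collections import defaultdict

# Hypothetical labels for the three assessment routes described above.
METHODS = {"quiz", "oral", "video"}
FLUENCY_THRESHOLD = 2  # two successful demonstrations = fluent


def fluent_targets(attempts):
    """Return the set of targets with at least two successful demonstrations.

    attempts: iterable of (target, method, successful) tuples (hypothetical format).
    Unsuccessful attempts carry no penalty; they simply don't count.
    """
    successes = defaultdict(int)
    for target, method, successful in attempts:
        if method not in METHODS:
            raise ValueError(f"unknown assessment method: {method}")
        if successful:
            successes[target] += 1
    return {t for t, n in successes.items() if n >= FLUENCY_THRESHOLD}


def core_fluent_count(attempts, core_targets):
    """Count how many of the (hypothetical) Core targets are fluent."""
    return len(fluent_targets(attempts) & set(core_targets))


if __name__ == "__main__":
    attempts = [
        ("T1", "quiz", True), ("T1", "video", True),   # two successes: fluent
        ("T2", "quiz", True), ("T2", "quiz", False),   # only one success
        ("C3", "oral", True), ("C3", "quiz", True),    # fluent via mixed routes
    ]
    print(sorted(fluent_targets(attempts)))            # ['C3', 'T1']
    print(core_fluent_count(attempts, {"C3", "C4"}))   # 1
```

Because a failed attempt is simply not counted, the sketch reflects the no-penalty revision policy: a student can keep trying until two successes accumulate.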

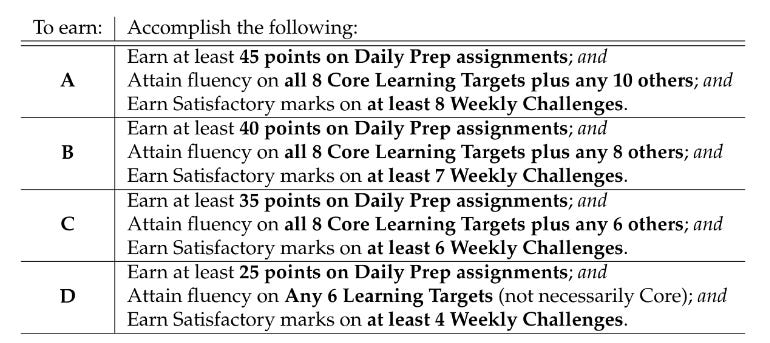

The base course grade was assigned using this table:

Three things I learned

1. Simpler really is better. The grading systems in my Winter 2021 courses were too complicated. So I went back to the one time since 2015 when I felt I'd gotten the grading system close to right and essentially copied it, since it's still the only version of specs grading I've ever used that felt easy to explain. It kept the grading focused on just two main branches: basic skills (assessed through Learning Targets) and applications of the basics (assessed through Weekly Challenges). The students and I still ran into issues, sometimes because I had simplified too much and removed a needed policy or some nuance. But compared to what I'd been using in classes since 2017, this felt much easier to manage, and students, once they got used to not having points or partial credit, often agreed (but not always!).

2. Timed in-class assessments just aren't worth it. The class was taught in person, the first time I'd taught with all students present since March 2020. I felt, perhaps like a lot of others, that one of the great benefits of being back in person would be the ability to give timed, supervised assessments (Learning Target quizzes, for me) in class, ostensibly to cut down on cheating. And it certainly was a controlled environment: no notes, only minimal tech, and lots of supervision. And I sort of hated it. With students needing to master a significant subset of the 20 Learning Targets, and two successful attempts required for fluency on each, it wasn't long before each quiz held more problems than most students could do in the time available. I didn't like students having to make strategic decisions about which targets to attempt and which to avoid on a quiz. Students felt like they had to do everything, every time there was a quiz. It wasn't optimal.

Covid-19 actually came to our rescue. Shortly after the Thanksgiving holiday, several students tested positive or were forced into quarantine due to close contact, making in-person quizzing problematic. So I shifted all remaining Learning Target quizzes to a do-at-home format. I posted quizzes on the LMS the day we’d scheduled them, and students had until 11:59pm to complete them — working the solutions out on paper, then scanning each Learning Target to a separate PDF and uploading to some assignment folders I’d set up. I removed all restrictions on technology and notes — how was I going to know if someone used a computer? — but in exchange, the criteria for “success” were rewritten to require more explanation than usual.

This worked out really well since students got access to helpful resources and a break from time pressure, and I got more explanations of reasoning which led (in my view) to better data about student learning. Next time I’ll just start this way and keep it there.

3. It helps to think of different grading approaches as tools. Specifications grading, contract grading, ungrading: there is no one kind of grading that's best. In my class this semester, I used a mix of different types. The Learning Targets gave the course a standards-based grading flavor, the Weekly Challenges were basically ungraded, and I included an ungrading-inflected approach to course grades in which I asked students to say what grade they thought they had earned and then back it up with evidence. Rather than cocoon myself in any one approach to grading, I've learned that it's best to think of grading as solving a problem (What's the best way to know whether, and how much, my students have learned and grown?) and then pick the right combination of grading tools for the job.

Three things that surprised me

1. The extent to which communication was a burden to students. This isn't a faux-polite way of saying I can't believe how bad students are at reading email, although that was an issue, and it isn't a swipe at students' writing or speaking skills. What I mean is that communication itself was work for students in a way that's different from past cohorts. Some students talked about how the very idea of social interaction was tiring and difficult for them, having spent a year doing college or high school online with minimal back-and-forth with live humans. Others had great internal struggles with emails and discussion board posts, owing to their bad experiences in online school. I knew information overload was an issue with my students, but I don't think I grasped how severe it was until now.

2. How ready students were to promote this grading system to others. Not all of the students liked what we were doing. But a lot did, and their vociferousness caught me by surprise. I found this out around week 8, when I was giving a talk to my colleagues in Computer Science about what we were doing in the course. It seemed like a good idea to include the student voice, so I gave a survey with the question: What do you want your CS profs to know about the grading system in this class? I was blown away by their responses --- this may be a future blog post from me --- which included gems like this:

> Proponents of a traditional grading system may argue that preparing for a test is a part of the grade. In other words, if I failed to iron out my misunderstandings prior to an exam, that should be reflected in the grade and I'll just have to hopefully do better next time. If students get several chances to fix their mistakes, then everyone will get an A eventually, what's the point? What's funny, is that is the point.

3. The extent to which this system deepened an important divide between students. I only have a preliminary tabulation of the course grades right now, but it's the most striking bimodal distribution I have ever seen, with a large number of D grades and an almost equally large number of A's. All the students are hard workers; some of the self-described "bad students in math" are getting high grades, while others with a deeper pedigree in math courses are failing. So what's making the difference? I'm not sure, but there seems to be a divide between students who are skilled at processing information and handling feedback and those who aren't. That skill gap might be what's driving the grades, and the grading system might be deepening the divide. I note that many of the students with low course grades either procrastinated on turning in Weekly Challenges until the last 3-4 days of the semester, or missed the same problem on a Learning Target quiz in the same way 5-6 times in a row over a 5-6 week period. Neither pattern occurred among the students with higher course grades.

So I need to sit down and think about the extent to which this system is measuring learning, versus how much it measures a student's time or information management skills. The two are related, but not the same.

Three questions I still have

1. What about ungrading? I read Susan Blum's Ungrading book over the summer and it left my mind buzzing. I wasn't prepared to go all-in with ungrading in the class that's just concluded, but I did incorporate some of the ideas in Weekly Challenges --- no points, just feedback. Then at the end, I gave a final exam that consisted of several reflection items, including this:

> Pretend there were no guidelines in the syllabus for calculating your final grade. Based on the work you have done all semester and the stated goals for the course, what grade do you think you have earned?

I promised students I would read every word they wrote, and if they could present evidence of learning that suggested they'd earned a higher grade than what the syllabus said, I'd give it serious consideration. This, to me, seems to be the end goal of ungrading: The student and instructor negotiate a course grade, based on evidence of learning, that each is happy with. (I could be wrong about that, remember I'm new to this.)

The results were mixed. In one section, only one or two students gave themselves a grade higher than what the syllabus indicated, and they gave sound reasoning and evidence to back it up. But in the other section, several students gave themselves grades much higher than the syllabus indicated, offered no evidence to support them, and in fact overlooked or outright misrepresented their work.

So on the one hand, I'm encouraged by the ungrading mini-experience because it allowed me to use the specifications grading system I'd set up as a guide but not as an immutable law of nature. I liked this a lot, and it gives me some hope as I prepare to scale way up with ungrading next semester (see below). But on the other hand, I can see that you cannot just run a class with a specifications grading system and then ask students to give themselves a course grade. There has to be a lot of one-on-one discussion and calibrating of expectations along the way. Ungrading, in other words, is more than just a flavor of specifications grading; it operates from a set of assumptions and beliefs about students that is quite different from anything else.

2. How do I get past the misconception that specs grading is "all or nothing"? The idea that specifications grading is, quote, "all or nothing" dogged us all semester. It came up early, made an appearance in a midsemester focus group, and showed up in the final exam responses. We talked in class about how a two-level pass/no pass system, coupled with a strong revision and resubmission setup, was better for learning than one-and-done assessments with partial credit. We also talked a lot about how pass/no pass does not mean “all or nothing”: “pass” does not mean “flawless”. But a good meme is hard to overcome, and many students became discouraged in their work because of the “all or nothing” idea. It's a branding issue, and I might need a marketing department to get me out of it.

3. How do I communicate with students in a way that gets heard? Alternative grading systems need a lot of communication. You can't always do this communication in person, and it's often better to have an email or course announcement so there's a searchable, permanent written record. But there's a balance to strike between how often you communicate and how much information each message carries. Post one course announcement per week with everything students need to know in it, and it gets so long and involved that people start skipping it. Post several short announcements per week to keep each one brief, and it feels like so many emails that no one can keep up. We really struggled with missed communications this semester, and I never found that balance. This is something I've simply got to figure out.

What's next

Next semester I'm returning to Modern Algebra, but with two major changes:

- I'll be conducting the course using inquiry-based learning (IBL), and

- It'll be full-on ungraded.

These are both big leaps for me. I’ve never done a whole course ungraded; and while I have done IBL before, the one time I tried it 15 years ago was a dumpster fire. My biggest concern going into the semester is simply whether I am good enough, as a teacher and as a human being, to be able to pull off such a radically student-centered approach that lets go of the meticulous planning that I tend to do and follows the student instead.

But my esteemed co-author David Clark has evangelized me into trying both of these — because why do things in moderation? And also, I benefit from David being just down the hall from me (although he’s on sabbatical next semester) and he is fresh off of using this approach in his Geometry class. He'll be describing how things went in that class next, and I'll be reading along with the rest of you, looking for clues.

Bonus extra thoughts

- I don't always actually read the student perception data on a class after the semester is over, but this time I did, because I needed to discuss it in my annual activity report. I was dreading it. The class almost fell apart at the end, through a combination of procrastination by students, a failure on my part to keep current with students and warn them when they were falling behind, and confusion about course policies that were designed to make the end of the semester relatively calm. For example, one student didn't grasp the distinction between "Core" Learning Targets and ordinary Learning Targets and didn't have enough Core targets to pass the course. Another student didn't understand the deadlines for submitting revisions and kept asking to submit more revisions late into the course, even halfway through final exam week. It was a shared failure, and the stress level was far higher than it needed to be.

- But the student perception data were, surprisingly, some of the best I've had in years. I'm not sure why. Maybe it's the "latest and loudest" effect, where you mostly hear from the small group of students who aren't thriving and expect them to put the harshest criticisms into their reviews. Or maybe those students were so busy putting in revisions through finals week that they didn't have time to turn in the evaluation.

- Nonetheless, a big lesson I take away from this course is the need to radically simplify. I wrote about this recently on an enterprise-level scale, but I need to do it myself in my own courses. Now that I've completed five weeks of an ungraded course, I find the simplicity of ungrading compelling. There are no Learning Targets, no Core or non-Core targets, and no weekly assessments; there's just work that students can do to demonstrate mastery and engagement, which they choose and I evaluate. But that's an upper-level, proof-based abstract algebra course; I'm thinking a lot about whether, and how well, this would fit a more basic class like discrete structures. Whatever the answer, I've learned there's no special prize for devising the cleverest grading system.