The age of AI has dawned, and it’s a lot to take in. eSpark’s “AI in Education” series exists to help you get up to speed, one issue at a time. AI hallucinations are next up.

We’ve kicked off the school year by diving deep into two of the biggest concerns about AI: bias and privacy. Both of these are important to understand and safeguard against, but they require some understanding of what’s going on behind the scenes to truly understand their impacts. Hallucinations, on the other hand, are so blatantly in-your-face that even the most novice digital citizen can see the problem once it’s pointed out.

Let’s take a look at what hallucinations are, what kinds of problems they can cause, and—as always—what you can do to prepare for and mitigate the impact they might have on your classroom.

What are AI hallucinations?

Hallucinations are specific to large language models (LLMs) like ChatGPT, Google’s Bard, Bing, and others. They fall on a spectrum ranging from almost-imperceptible inconsistencies to major factual errors and disinformation. It can be very difficult for people who are not already subject matter experts to separate facts from hallucinations, as most LLMs tend to respond with the same level of confidence whether they are right or just making stuff up.

Hallucinations can be broadly classified into three categories:

1. Contradictions

If you spend enough time talking to an LLM, you’ll notice that it’s not always consistent with its responses. Researchers and internet sleuths have been prodding the various models with moral dilemmas, Socratic dialogue, and politically polarizing prompts for months now to see how they respond, and it has become clear that the technology is not always able to reconcile the natural discrepancies in its training data.

In one hilarious example, a Yale student shared an exchange that ended with the following statement from ChatGPT:

“When I said that tequila has a ‘relatively high sugar content,’ I was not suggesting that tequila contains sugar.”

While the author of the above post couldn’t help but wonder if he was being gaslit by the language model, this is a good example of how LLMs often double down on their mistakes rather than admitting fault and reconciling the contradiction.

In another, more formal study, two physics teachers gave ChatGPT a relatively simple question related to the force of gravity, only to have it return a response that was not only wrong, but contradicted itself in multiple places. A lengthy back and forth did not resolve the issue, as ChatGPT was unable to recognize its own inconsistencies.

This is another stark reminder that LLMs are only as good as the data they’re trained on. As the data set gets larger and/or less reliable, contradictions are inevitable. For example, ChatGPT pulled data from Reddit as part of its training set. Anybody who has ever used Reddit can attest to the fact that it is not exactly a great source for consistent, factual information at scale.

2. False facts

While LLMs can be extremely persuasive, they are not always truthful. If you thought disinformation was a problem before, just wait until we have to suffer through our first AI-powered election cycle. The three most popular LLMs, ChatGPT, Bing, and Google’s Bard, have all been proven to repeatedly cite fake sources or statistics, make objectively false statements, and fail extensive fact-checking exercises. Some of the more notable examples of AI hallucinations from the past year include:

- Google’s Bard making a very public, $100+ million mistake about the James Webb telescope in its public launch promo materials.

- ChatGPT fabricating cases for lawyers attempting to cite legal precedent.

- All of the big three LLMs citing made up articles to support fictitious claims about the history of AI journalism.

While these examples were all high-profile enough to make the mainstream news, the truth is that discerning consumers can identify similar mistakes in regular day-to-day interactions with the technology. That’s not ideal in a school setting. Until the technology improves significantly (which, at the rate it’s going, might be as soon as a few months from now), we should not rely on any use of AI that results in direct instruction of facts.

3. Lack of nuance and/or context

There is significant overlap between this category and the previous two, but it’s important to remember that LLMs are language models, not subject matter experts. They are still prone to the pitfalls that come with the nuances of language, and they don’t always have the requisite “knowledge” to reconcile literal meaning with the necessary context.

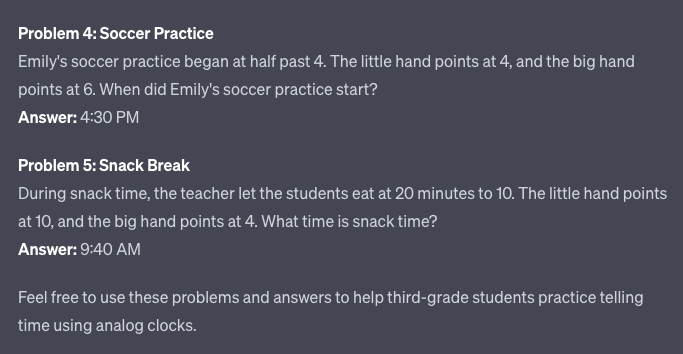

For this exercise, I asked Chat GPT to write me five math word problems about telling time using analog clocks. It started out promising, with a nice introductory instructional paragraph that mostly made sense. I thought it was interesting that it said “the little hand points to the current hour,” but chalked it up to an oversimplification. Then things got weird.

The first three problems it gave me were fine. But the last two were more problematic. As you can see in the screenshot below, ChatGPT was telling me that at 4:30, the little hand points at 4. This, of course, is not true—the little hand points exactly between 4 and 5, a seemingly small difference, but one that could be confusing for a student who doesn’t know any better. The problem made technical sense based on the stated premise in the instructional paragraph, but that premise lacked the required nuance.

The 5th problem was even more—forgive me—problematic. In this case, ChatGPT tried getting fancy by referring to “20 minutes to 10,” but misread its own statement and told me that the little hand points at 10 and the big hand points at 4. Imagine how confusing this would be for a third-grader. You can find similar examples of AI hallucinations all over the internet from math and science teachers who have sampled the technology.

What teachers can do about it

Language isn’t easy, and LLMs still largely lack the “critical thinking” skills needed to translate STEM problems or go beyond the literal meaning of certain phrases. Until those issues are ironed out, teachers will need to be especially vigilant to ensure that they and their students are not putting too much faith in these tools. Any use of these programs for assessment building, lesson planning, or other time-saving tasks should be reviewed by a qualified human being before getting put in front of kids.

When eSpark developed Choice Texts, for example, we knew we wanted to build a personalized experience for both fiction and non-fiction domains, but we found the existing tools to be unpredictable and unreliable in the realm of non-fiction. So, instead of crossing our fingers and hoping for the best, we built a much stronger fence around the Reading Informational texts. We looked for ways to give students the same choice and freedom of expression they valued in the Reading Literature activities, while also adding multiple layers of human vetting throughout the process. Our hope is that someday we’ll trust that students will not be exposed to disinformation, but we need to see more reliable responses first.

When using any “AI-powered” tools, vetting these kinds of things will be important. The closest comparison is probably the early days of Wikipedia, when many pages were stuffed full of uncited opinions and even outright lies. The very idea of citing a Wikipedia article as a source was laughable. Fast forward a few years, and it’s become one of the most credible sources on the Internet. AI hallucinations will likely not be as big a problem in the not-too-distant future.

As mentioned in previous installments of this series, the best thing teachers can do is loop in their technology departments whenever they find a new, appealing option. They will have the processes in place to ensure that all new products are safe, secure, and appropriately vetted.

Additional resources

- AI in Education: The Bias Dilemma

- AI in Education: Privacy and Security

- Feature Spotlight: Choice Texts

Want to stay in the loop on the topic of AI in schools? Subscribe to EdTech Evolved today for monthly newsletter updates and breaking news.