At MIND, we take great pride in holding ourselves accountable through continuous research. As a result, we can show how effective ST Math is, and how critical it is to student math achievement, by demonstrating repeatable results at scale. Yet despite ST Math's proven success, districts and schools may decide to stop using it.

There is no denying the significant improvement in grade-level performance when schools and districts implement ST Math in classrooms. The question is: do math scores drop when students stop using ST Math?

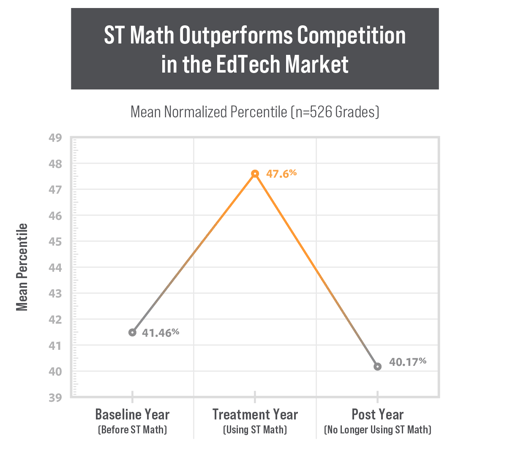

The answer: a resounding YES (see chart).

The analysis covers 526 schools (grades 3, 4, and 5) in 19 states* and the District of Columbia that used ST Math for at least one year between 2005/06 and 2017/18. All of them stopped using it before 2019/20 (the cutoff reflects test data missing because of the COVID-19 pandemic).

Districts rarely remove a digital math component without replacing it with a competitor product. Any such product adds its own average effect size (its own scale score points) to student scores, depending on how much it is used. So when ST Math is dropped, the scale score points it was providing must be replaced by whatever the new product delivers. The results of our studies covering 324 schools show that replacement math products cannot match ST Math's effects, and school performance drops right back to the "average competitor" level.

Comparing the "Baseline Year" (before ST Math) with the "Treatment Year" (using ST Math), adopting ST Math was associated with an increase of 6.14% in percentile ranking. From the "Treatment Year" to the "Post Year" (no longer using ST Math), the same school-grades averaged a 7.43% decrease in percentile ranking after ST Math was replaced. Both results were statistically significant.
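The two averages above can be sketched in a few lines of Python. This is a minimal illustration, not MIND's code: it assumes each school-grade has a percentile rank for the Baseline, Treatment, and Post years, and that the reported changes are mean differences in percentile rank (the post does not say whether they are percentile points or relative percentages). The record layout and the name `mean_percentile_changes` are hypothetical.

```python
from statistics import mean


def mean_percentile_changes(records):
    """Return (adoption_change, removal_change) averaged over school-grades.

    Hypothetical input: each record is a dict with "baseline", "treatment",
    and "post" percentile ranks for one school-grade. Changes are assumed to
    be simple differences in percentile rank.
    """
    # Baseline Year -> Treatment Year: change associated with adopting ST Math
    adoption_change = mean(r["treatment"] - r["baseline"] for r in records)
    # Treatment Year -> Post Year: change after ST Math was replaced
    removal_change = mean(r["post"] - r["treatment"] for r in records)
    return adoption_change, removal_change
```

A positive first value followed by a negative second value is the rise-then-fall pattern this study reports; testing whether each change is statistically significant is a separate step not shown here.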

These findings make a strong case for ST Math's efficacy and, through its absence, show that it outperforms the competitor products that replace it on standardized math tests.

Many innovations were necessary to enable this kind of "what happens after" analysis. Every year, MIND collects publicly available school-grade-level results from state standardized math tests and analyzes every new cohort of ST Math users. These state math scores are normalized using z-scores and converted into normalized percentile rankings, which allows comparisons across years, exams, and states.
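A minimal sketch of the z-score-to-percentile step, assuming the z-scores are computed across all schools taking the same state exam in the same grade and year, and that the percentile comes from the standard normal CDF. The grouping and the helper name `normalized_percentiles` are assumptions for illustration; the post does not specify MIND's exact procedure.

```python
from statistics import NormalDist, mean, pstdev


def normalized_percentiles(scores):
    """Map school -> mean scale score to school -> normalized percentile (0-100).

    Assumption: `scores` holds one state exam, grade, and year, so that
    z-scores put schools on a common scale before converting to percentiles.
    """
    values = list(scores.values())
    mu = mean(values)
    sigma = pstdev(values)  # spread across schools in this group
    if sigma == 0:
        raise ValueError("scores have zero spread; z-scores are undefined")
    std_normal = NormalDist()  # mean 0, standard deviation 1
    return {
        school: 100 * std_normal.cdf((score - mu) / sigma)
        for school, score in scores.items()
    }
```

Because every state's scores pass through the same z-score and CDF transformation, a school's percentile can be compared with schools from other states, exams, and years even though the raw scale scores differ.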

Unlike previous studies, which show the boost from ST Math upon adoption and use, this study goes one step further by showing what happens after ST Math is no longer used. These "before" to "after" findings not only show the positive effect of ST Math on student math achievement but also warn that math gains drop when students stop playing ST Math.

In total, MIND has run hundreds of studies using a quasi-experimental design (QED) that compares grades 3-5 at schools using ST Math with fidelity (at least 85% student enrollment and at least 40% grade-mean progress completed by April 15) against a matched comparison group that has never used ST Math. Put simply, we compared how math scores changed from before to after ST Math was implemented.
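The fidelity rule can be expressed as a simple filter. This minimal Python sketch assumes enrollment and progress are stored as fractions between 0 and 1 and that "grade-mean progress" is a single number per school-grade; the `SchoolGrade` record and `meets_fidelity` function are hypothetical, not MIND's code.

```python
from dataclasses import dataclass


@dataclass
class SchoolGrade:
    """One school-grade's usage summary (hypothetical field names)."""
    school: str
    grade: int
    enrollment_rate: float    # share of students enrolled in ST Math, 0.0-1.0
    progress_by_apr15: float  # grade-mean progress completed by April 15, 0.0-1.0


def meets_fidelity(sg, min_enrollment=0.85, min_progress=0.40):
    """True if a grade 3-5 school-grade meets both fidelity thresholds."""
    return (
        3 <= sg.grade <= 5
        and sg.enrollment_rate >= min_enrollment
        and sg.progress_by_apr15 >= min_progress
    )
```

Both thresholds are inclusive here ("at least"), so a school-grade at exactly 85% enrollment and 40% progress qualifies.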

As in this study, the comparison group can be assumed to use some other product that ST Math must outperform to show a positive effect, which it does both when it is adopted and after it is dropped.
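The comparison-group logic can be illustrated with a difference-in-differences estimate, one common way to analyze a matched quasi-experimental design; the post does not say this is the exact estimator MIND uses. In this minimal sketch, with a hypothetical function name, each argument is a list of percentile ranks, and subtracting the comparison group's change nets out whatever the comparison schools were using.

```python
from statistics import mean


def diff_in_diff(treated_pre, treated_post, comparison_pre, comparison_post):
    """Difference-in-differences on mean percentile ranks (hypothetical helper).

    The comparison schools are assumed to use some other product, so the
    result estimates ST Math's effect relative to that product rather than
    relative to no program at all.
    """
    treated_change = mean(treated_post) - mean(treated_pre)
    comparison_change = mean(comparison_post) - mean(comparison_pre)
    return treated_change - comparison_change
```

A positive result means the ST Math schools gained more than their matched comparison schools over the same period.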


All edtech products claim effectiveness, and it's reasonable to assume that they add some value compared with "nothing." Every instructional program has some positive effect on student learning outcomes. So it should come as no surprise that all edtech programs work.

The truth is that any program can generate and provide "evidence." But we should be asking more than whether an edtech product is effective. Evaluating an instructional program requires a more comprehensive and nuanced lens, one we're more than willing to apply, especially when determining ST Math's efficacy.

With all this in mind, the underlying question remains: do most edtech programs achieve the same, better, or worse results than ST Math at scale?

The results of this study clearly show the relative effectiveness of ST Math. More importantly, they show what happens when students stop using it.

Districts and schools need to recognize the risk they take when replacing ST Math with another edtech product. When every student's math achievement is at stake, this is not a risk worth taking.

To learn more about why students who use ST Math see higher state scores, visit the link below.

*Arizona, California, Connecticut, Florida, Georgia, Iowa, Illinois, Indiana, Louisiana, Maryland, Montana, New Hampshire, New Mexico, Nevada, New York, Pennsylvania, Texas, Virginia, and Washington

Jessica Guise is a data analyst at MIND Research Institute. She just completed her two-year Strategic Data Project Data Fellowship at Harvard University.
