This article features contributions from MIND's Education Research Director Martin Buschkuehl, and MIND's Chief Data Science Officer Andrew Coulson.

Over the past several years, MIND Research Institute has been endeavoring to change the conversation around edtech evaluations. MIND’s Chief Data Science Officer Andrew Coulson has made the case time and time again that the “one good study” paradigm is an insufficient basis for edtech practitioners’ decisions, and that the education industry should adopt a model of repeatable results at scale.

Our latest ebook, Demanding More from EdTech Evaluations, outlined the problems in the current landscape of edtech evaluation and what can be done to provide more relevant efficacy information, so that decision makers can make more valid, data-informed decisions about which products fit their teachers and students.

A few weeks ago, EdSurge published an article that echoed many of those same sentiments, most notably that overreliance on “gold standard” RCTs is the wrong approach for edtech. In the article, titled “Debunking the Gold Standard Myths in EdTech Efficacy,” writers Jennifer Carolan and Molly B. Zielezinski suggest that edtech products should have an “efficacy portfolio”: a body of evidence that includes summative, formative, and foundational research. The article goes on to offer guidance on where an edtech company might focus its efficacy measurement efforts, based on the maturity of its product and organization.

An efficacy portfolio is a collection of evidence gathered over time that captures different elements of whether and how a product is “working.” Some of the evidence may include a QED or RCT—but those approaches no longer bear the full weight of demonstrating efficacy.

-Jennifer Carolan and Molly B. Zielezinski

We are gratified to find that the model outlined by Carolan and Zielezinski is consistent with the approach MIND has been following and promoting for years. Here is what goes into MIND’s efficacy portfolio:

Our ST Math program is based on more than two decades of continuous development and refinement. It is grounded in neuroscientific and cognitive research, drawing on a wide range of domains, including automatic and controlled processes (e.g., Fuster, 2004; Schneider & Chein, 2003), schemas and heuristics (e.g., Polya, 1959; Ghosh & Gilboa, 2014), embodied cognition (Tran, Smith, & Buschkuehl, 2017), and creativity and problem solving (e.g., Dietrich, 2004). Our learning model is built on a unique, patented approach to teaching mathematics through computer-based instruction and the strategic use of informative feedback.

In a variety of ways, we continuously assess the usability and effectiveness of the ST Math program, and we provide educators, administrators and students with the tools to do so as well. ST Math’s built-in reports provide just-in-time data for monitoring both how teachers administer the program and what students achieve within it. MIND also provides districts with dose-correlation studies that relate program usage to assessment results, and has partnered with other research organizations, such as the PAST Foundation and WestEd, on formative research into program adoption and other topics.

In the 2017-2018 school year alone, our product team and user experience researchers spent over 400 hours observing and interviewing ST Math educators and administrators, gathering feedback and data on how to continuously improve our program.

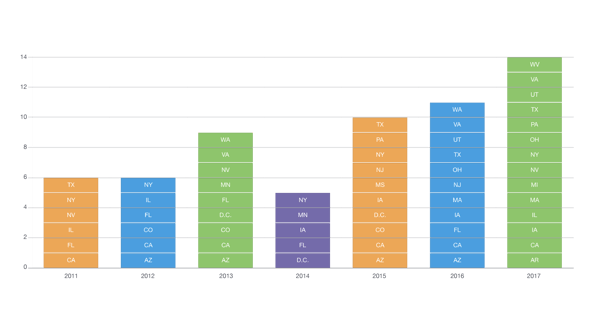

As part of MIND's efforts to make edtech research more accessible and to better equip educators and administrators to make informed decisions, we have created a repeatable, scalable study method that we have continuously refined over a number of years. Each year, the MIND Data and Evaluation team performs multiple transparent evaluations of the results of all ST Math school cohorts. You can see studies from across the country on our Results page.

In addition to our in-house efficacy studies, we also have independent, third-party validation. For example, in March 2018 the independent research firm WestEd published a study of ST Math that was the largest of its kind to evaluate an education technology math program using state assessment results. It evaluated two outcomes: average math scale scores and the proportion of students scoring proficient or above in math. On both measures, grades that faithfully implemented ST Math improved significantly more than similar grades that did not use the program. The effect size averaged 0.36 across all grades, exceeding the 0.25 threshold that the federal What Works Clearinghouse uses to define a "substantively important" effect.

We currently have an IES-funded RCT that What Works Clearinghouse (WWC) reviewed in 2015 and found to meet ESSA Tier 2, as well as the ‘Meets Evidence Standards with Reservations’ level of the WWC standards. The 2018 WestEd study is currently under independent review against both ESSA Tier 2 and WWC evidence requirements, and the results of that review are expected to be made public in June 2019.

We pledge to continue to promote a model of edtech evaluation that includes:

MIND is very encouraged by the recent EdSurge article and the signs that the landscape of edtech evaluation is changing. The health of the market depends on this change, which will catalyze a new paradigm for sourcing programs and launch a continuous improvement cycle for edtech. While that change may not happen as quickly as we would like, progress is being made, and we will continue to be a strong voice in the conversation and to share pioneering methods and examples. Our recent ebook, Demanding More from EdTech Evaluations, offers a deeper dive into our approach, and you can download it here. We also recently submitted public comments on the priorities of the Institute of Education Sciences, focused primarily on research and evaluation; you can read those here.

Brian LeTendre was the Director of Impact Advancement at MIND Research Institute. In addition to building thought leadership and brand awareness for MIND, Brian worked cross-functionally, both internally and externally, to amplify MIND's social impact and accelerate our mission. He is an author, podcaster and avid gamer.