How Can You Give Student Feedback In Large Classrooms?

contributed by Rich Ross

Teaching courses with high enrollments (more than 200 students in a semester) is a daunting task for a variety of reasons, and giving feedback to students is particularly challenging. One-on-one conferencing is practically impossible, yet it seems to me that many students learn as much from the feedback I give as they do from completing the assessments themselves. So these courses raise the question: How can we give meaningful and rich feedback at such a scale?

I’ve been teaching the Introduction to Statistical Analysis course (STAT 2120) at the University of Virginia for a few years now, and with enrollment of around 500 students per term, there is a lot to do. All told, students typically submit about 60 assignments in the class. I’m fortunate to have the support of more than a dozen graders and two graduate students; even so, grading so many assignments for hundreds of students takes a lot of time.

Here are three strategies I use in my course to make this process meaningful rather than tedious. All three strategies focus on providing students with useful and detailed feedback on their work.

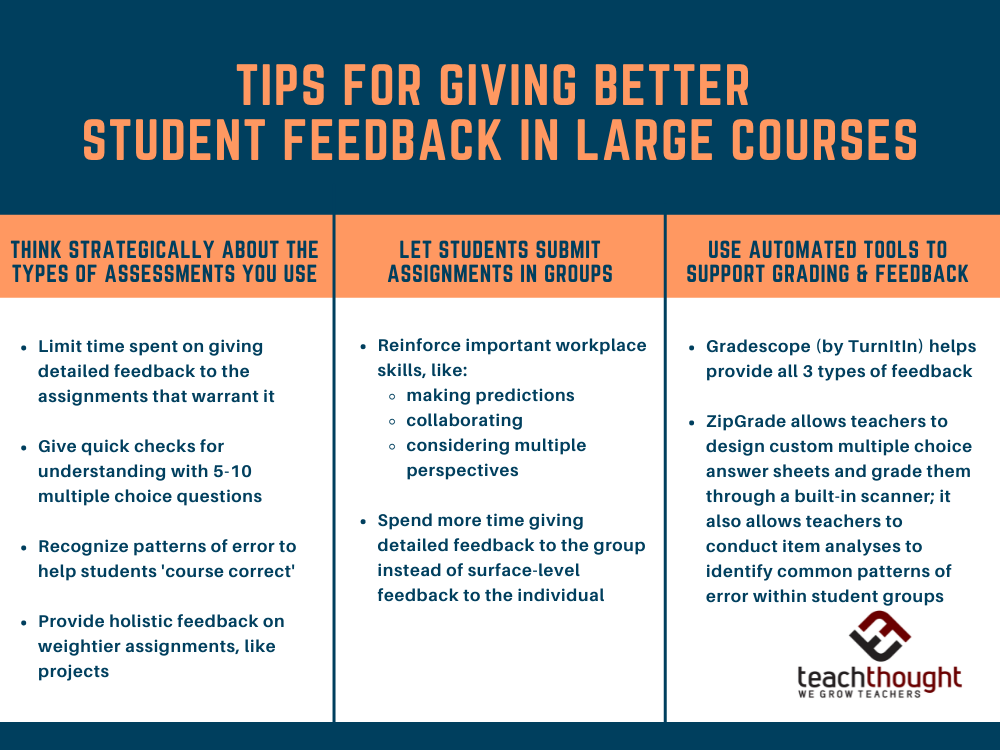

1. Think strategically about the assessments you use and the feedback each one needs.

Not every task or assignment calls for detailed feedback. By strategically matching the depth of feedback you give to the type of learning task or assessment, you can limit the time you spend on providing detailed feedback to the kinds of assignments that most warrant it.

In my course, I give students three types of feedback, each matched to a different kind of task:

(1) Quick checks for understanding. Often after a lecture or a reading assignment, I just want to make sure students are following along: Do they understand the concept? Are they on the right track? To check, I give short quizzes with five to ten ‘select all’ or multiple-choice questions. Many students finish these in five minutes or less, and they get instant feedback on whether each answer is right or wrong.

With these brief assessments, I don’t walk students through the correct answer. Instead, it’s each student’s responsibility to figure out what they did wrong and to correct their misunderstanding. Students can do this by reaching out to another member of the course community: a fellow student, a teaching assistant, or me during office hours. This encourages them to interact with each other and with the course staff, and it reinforces the idea that each student is accountable for their own work.

(2) Recognizing patterns of error. For problem sets and longer end-of-chapter quizzes, I want to help students understand the kinds of errors they might be making, so they can ‘course correct’ as soon as possible. For this type of feedback, I create and post a scoring rubric that shows the correct answer, explains the mistakes students commonly make on each question, and lists how many points are deducted for each type of error.

For example, on one of our first assignments, we give students a set of values and ask them to use software to compute and report several values that fall into three categories: the mean (the average value), the standard deviation (a measure of how much the values vary), and the five-number summary (the minimum, first quartile, median, third quartile, and maximum, which divide the values into four “chunks” of roughly equal size). On a question like this, the main patterns of error are easy to spot: some students don’t report the mean, some don’t report the standard deviation, and some don’t report the five-number summary.
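For readers outside statistics, the computation students are asked to do can be sketched in a few lines. This is a minimal sketch using Python's standard-library `statistics` module; the sample data is hypothetical, and the course's actual software is not specified here. Quartile conventions differ between packages, so a given tool may report slightly different quartiles than this sketch does.

```python
import statistics


def summarize(values):
    """Return the mean, sample standard deviation, and five-number summary."""
    data = sorted(values)
    # method="inclusive" interpolates between observed values (the same rule as
    # R's default quantile type 7); other software may use a different convention.
    q1, median, q3 = statistics.quantiles(data, n=4, method="inclusive")
    return {
        "mean": statistics.mean(data),
        "sd": statistics.stdev(data),  # sample SD, with n - 1 in the denominator
        "five_number": (data[0], q1, median, q3, data[-1]),
    }


values = [2, 4, 4, 5, 7, 9, 10]  # hypothetical assignment data
result = summarize(values)
print(result["five_number"])  # (2, 4.0, 5.0, 8.0, 10)
```

Each of the three dictionary entries corresponds to one of the three things a student might forget to report.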

Recognizing patterns of error helps students identify the part(s) of the problem-solving process that are especially prone to mistakes. In this example, my feedback can be distilled into one set of comments per error type. Each set applies to every student who made that error while still speaking directly to it. Reusing the same feedback for each error type saves time, which leaves more time for interacting with students instead of with the gradebook.
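The idea of writing feedback once per error type can be sketched as a small rubric table. This is a hypothetical illustration in Python, not how Gradescope or the course actually stores rubrics: the error-type keys, comment wording, and point values are all assumptions, built around the three errors in the summary-statistics example.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class RubricItem:
    comment: str    # feedback written once and reused for every matching submission
    deduction: int  # points removed when this error is present


# Hypothetical rubric for the summary-statistics question; wording and points are illustrative.
RUBRIC = {
    "missing_mean": RubricItem("The mean is missing. Report the average of the values.", 2),
    "missing_sd": RubricItem("The standard deviation is missing. Report how much the values vary.", 2),
    "missing_five_number": RubricItem(
        "The five-number summary is missing. Report the minimum, first quartile, "
        "median, third quartile, and maximum.",
        3,
    ),
}
MAX_POINTS = 10  # hypothetical value of the question


def grade(error_types):
    """Return (score, comments) for one submission from the error types a grader tagged."""
    score = MAX_POINTS
    comments = []
    for error in error_types:
        item = RUBRIC[error]
        score -= item.deduction
        comments.append(item.comment)
    return max(score, 0), comments


# Every submission tagged "missing_sd" receives the identical comment and deduction.
score, comments = grade(["missing_sd"])
print(score)  # 8
```

The grader's per-submission work shrinks to tagging which errors occurred; the wording of the feedback is written only once.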

(3) Holistic feedback. For projects and other weightier assignments, I want to give more detailed feedback that is unique to each submission. I can afford to do this because of the time I have saved on feedback for the other kinds of assignments.

2. Let students submit assignments in groups as appropriate.

There are many benefits to having students work together and submit a single project or piece of work for evaluation. For one thing, it helps students learn to collaborate, which is an important workforce skill, and it lets them learn from each other. It also benefits the instructor and course graders, because we can spend more time giving detailed feedback to each group instead of surface-level feedback on three to five times as many submissions.

One of our group activities asks students to record the results of flipping a coin many times. Working in a group, students first predict the outcome: how many heads and tails will occur, how many heads in a row, and so on. Then they combine the results of their flips, so no one has to spend as much class time flipping a coin. At the end, the students discuss each other’s predictions and draw a final set of conclusions as a group. Each person is exposed to several ideas, learns from their own experience as well as from others’, and gets the chance to draw appropriate conclusions based on the questions in the activity. Meanwhile, the graders can afford to dig a little deeper when giving feedback, since grading 120 submissions is much less daunting than grading 500.
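The pooling step in the coin-flip activity can be sketched with simulated flips standing in for physical ones. This is a hypothetical illustration: the group size, the number of flips per student, and the function names are assumptions, not part of the course materials. One design choice worth noting is that streaks are measured within each student's own sequence, because joining sequences end to end could create a run of heads that never actually happened.

```python
import random


def longest_run(flips, face="H"):
    """Length of the longest streak of consecutive `face` results."""
    best = current = 0
    for flip in flips:
        current = current + 1 if flip == face else 0
        best = max(best, current)
    return best


def pool_results(member_flips):
    """Combine each member's flip sequence into group-level totals."""
    all_flips = [flip for flips in member_flips for flip in flips]
    heads = all_flips.count("H")
    return {
        "flips": len(all_flips),
        "heads": heads,
        "tails": len(all_flips) - heads,
        "proportion_heads": heads / len(all_flips),
        # Streaks are computed per member, never across member boundaries.
        "longest_heads_run": max(longest_run(flips) for flips in member_flips),
    }


rng = random.Random(2120)  # fixed seed so the simulation is reproducible
# Hypothetical group: 4 members, 50 flips each.
group = [[rng.choice("HT") for _ in range(50)] for _ in range(4)]
print(pool_results(group))
```

Pooling 200 flips instead of 50 gives the group a steadier estimate of the proportion of heads to compare against their predictions.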

3. Use an automated tool to support the grading and feedback process.

We use a tool called Gradescope by Turnitin to help with grading in STAT 2120, and it helps us provide all three kinds of feedback described above.

For instance, Gradescope automates the scoring of the quick ‘checks for understanding.’ It also simplifies categorizing errors by using machine learning to group student responses according to the type of error they make, and it helps instructors give holistic feedback on projects and assignments by applying rubrics across an entire submission.

With the right technology, we don’t need to rewrite the same feedback hundreds of times in a row, so we can spend more time interacting with students and helping them synthesize course ideas. We aren’t replacing the human element in grading and feedback; on the contrary, we’re amplifying it by letting automation handle the repetitive tasks so that we can use our time more wisely.

One of the key questions I often grapple with in my large course is: How can we create a more meaningful experience for students? Although administering exams and giving feedback in a large course can be challenging, I’ve found that these strategies and technologies improve the course experience without sacrificing substance.

Richard Ross is an Assistant Professor in the Department of Statistics at the University of Virginia. See details about how he uses Gradescope for large classes and collaborative work here.